ASPLOS '23 Session 5C: FLAT: An Optimized Dataflow for Mitigating Attention Bottlenecks

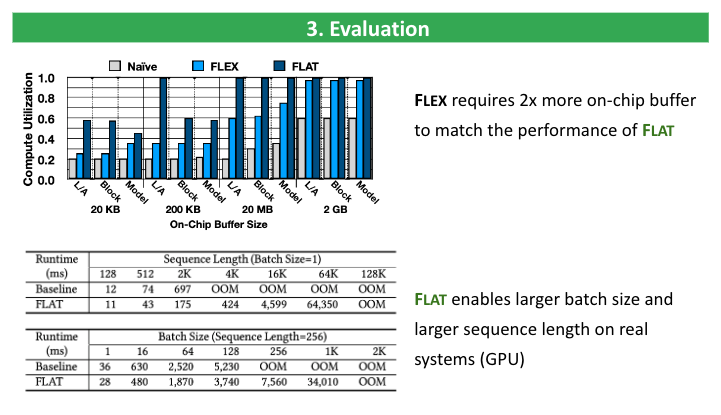

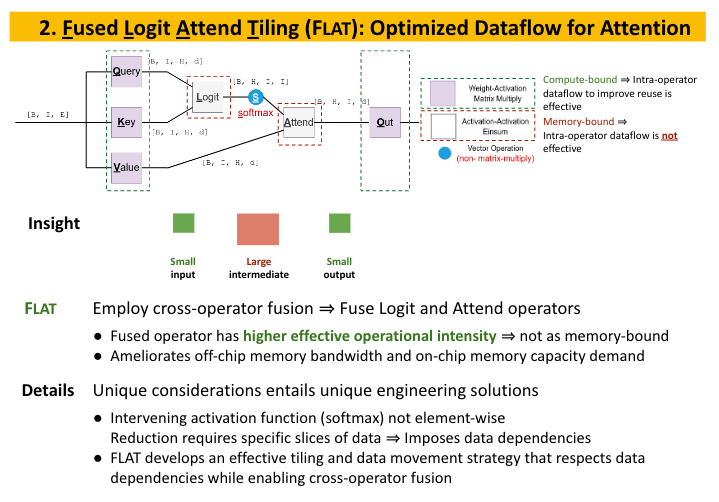

FLAT dataflow concept. We design a specialized dataflow, Fused Logit Attention Tiling (FLAT), targeting the two memory-bandwidth-bound operators in the attention layer, L and A. FLAT includes both an intra-operator dataflow and a specialized inter-operator dataflow that executes L and A in concert. To realize the full potential of this bespoke mechanism, we propose a tiling approach that enhances data reuse across attention operations. Our method both mitigates the off-chip bandwidth bottleneck and reduces the on-chip memory requirement. FLAT delivers 1.94x (1.76x) speedup and 49% (42%) energy savings compared to the state of the art.
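To make the fusion idea concrete, here is a minimal pure-Python sketch. It assumes the usual reading of the two operators: L is the logit operator Q K^T and A is the attend operator softmax(L) V. The function names (`logit_row`, `unfused_attention`, `fused_row_tiled_attention`) and the choice to tile along query rows are illustrative assumptions; FLAT's actual loop order, tile sizes, and hardware buffering are not reproduced here. The sketch contrasts an unfused baseline, which materializes the whole N x N logit matrix, with a fused loop that computes L, the softmax, and A for one tile of query rows before moving on to the next.

```python
import math

# Illustrative only: assumes L = Q K^T (logit) and A = softmax(L) V (attend).


def logit_row(q_row, k):
    """One row of L = Q K^T: dot products of a query row with every key row."""
    return [sum(a * b for a, b in zip(q_row, k_row)) for k_row in k]


def softmax(row):
    """Numerically stable softmax; it needs the complete logit row (a reduction)."""
    m = max(row)
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def attend_row(p_row, v):
    """One row of A = softmax(L) V: a probability-weighted sum of value rows."""
    d = len(v[0])
    return [sum(p * v_row[j] for p, v_row in zip(p_row, v)) for j in range(d)]


def unfused_attention(q, k, v):
    """Baseline: materialize the full N x N logit matrix, then run the attend step."""
    logits = [logit_row(q_row, k) for q_row in q]
    out = [attend_row(softmax(row), v) for row in logits]
    peak_logit_elems = len(logits) * len(k)
    return out, peak_logit_elems


def fused_row_tiled_attention(q, k, v, tile_rows):
    """Hypothetical fused sketch: L, softmax and A run back to back per tile of
    query rows, so at most tile_rows x N logits are live at any time."""
    out, peak_logit_elems = [], 0
    for start in range(0, len(q), tile_rows):
        q_tile = q[start:start + tile_rows]
        logit_tile = [logit_row(q_row, k) for q_row in q_tile]  # tile of L
        peak_logit_elems = max(peak_logit_elems, len(logit_tile) * len(k))
        for row in logit_tile:  # each row is complete, so the softmax is legal here
            out.append(attend_row(softmax(row), v))  # tile of A
        # This tile's logits are no longer needed once its outputs are written.
    return out, peak_logit_elems


if __name__ == "__main__":
    n, d = 8, 4
    q = [[((i + 1) * (j + 2)) % 7 / 7 for j in range(d)] for i in range(n)]
    k = [[((i + 3) * (j + 1)) % 5 / 5 for j in range(d)] for i in range(n)]
    v = [[((i + 2) * (j + 3)) % 11 / 11 for j in range(d)] for i in range(n)]
    ref, ref_peak = unfused_attention(q, k, v)
    out, peak = fused_row_tiled_attention(q, k, v, tile_rows=2)
    max_err = max(abs(a - b) for ra, rb in zip(ref, out) for a, b in zip(ra, rb))
    print("peak logits: unfused", ref_peak, "fused", peak, "max error", max_err)
```

With n = 8 and tile_rows = 2, the unfused baseline holds 64 logits at once while the fused loop never holds more than 16, and both produce the same output. Tiling along whole query rows is what keeps the fused version correct: each softmax still sees its complete row.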

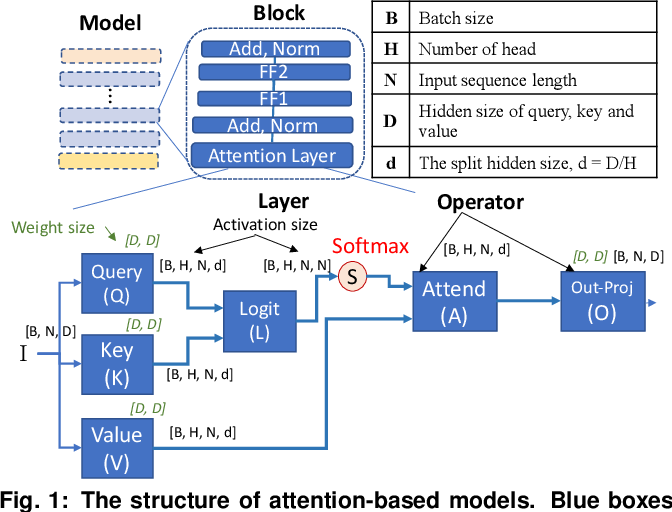

This work addresses these challenges by devising a tailored dataflow optimization for attention mechanisms, called FLAT, that leaves their functionality unchanged. The dataflow processes costly attention operations through a unique fusion mechanism, reducing the growth of the memory footprint from quadratic to merely linear in sequence length.

ASPLOS '23: The 28th International Conference on Architectural Support for Programming Languages and Operating Systems, Session 5C: Machine Learning.

Attention mechanisms, primarily designed to capture pairwise correlations between words, have become the backbone of machine learning, expanding beyond natural language processing into other domains. This growth in ada…

Fusing the attention operators is challenging because of their capacity demand and because the computation between them is not element-wise: the reduction requires specific slices of data, which imposes data dependencies. FLAT develops an effective tiling and data-movement strategy that respects these data dependencies while enabling cross-operator fusion.
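The quadratic-to-linear claim can be checked with simple arithmetic. The sketch below is a back-of-the-envelope model, not FLAT's own buffer accounting: it assumes fp16 storage (2 bytes per element), a head dimension d = 64, a hypothetical tile of R = 64 query rows, and that K and V for one (batch, head) pair are held on chip in full. Tiles are whole query rows because the softmax reduction needs a complete logit row, which is exactly the data dependency a fused tiling has to respect.

```python
def unfused_logit_bytes(n, bytes_per_elem=2):
    """Unfused: the full N x N logit matrix for one (batch, head) pair."""
    return n * n * bytes_per_elem


def fused_working_set_bytes(n, d, tile_rows, bytes_per_elem=2):
    """Row-tiled fusion (illustrative model): Q tile (R x d), full K and V
    (N x d each), logit tile (R x N) and output tile (R x d).
    Every term is at most linear in N."""
    elems = tile_rows * d + 2 * n * d + tile_rows * n + tile_rows * d
    return elems * bytes_per_elem


if __name__ == "__main__":
    d, tile_rows = 64, 64  # assumed head dimension and tile height
    for n in (1024, 2048, 4096, 8192):
        unfused = unfused_logit_bytes(n)
        fused = fused_working_set_bytes(n, d, tile_rows)
        print(f"N={n:5d}  unfused logits {unfused / 2**20:8.2f} MiB  "
              f"fused working set {fused / 2**20:6.2f} MiB")
```

Under these assumptions, at N = 4096 the unfused logits alone take 32 MiB, while the fused working set is about 1.5 MiB. Doubling N to 8192 quadruples the former to 128 MiB but only roughly doubles the latter, to about 3 MiB.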

Figure 1 from An Optimized Dataflow for Mitigating Attention Performance

Kao, Sheng-Chun; Subramanian, Suvinay; Agrawal, Gaurav; Yazdanbakhsh, Amir; and Krishna, Tushar. 2023. "FLAT: An Optimized Dataflow for Mitigating Attention Bottlenecks."


