Conjugate Gradient Method Part 1

Chapter 5 Conjugate Gradient Methods Introduction To Mathematical

Outline: 14. The nonlinear conjugate gradient method; 14.1. Outline of the nonlinear conjugate gradient method; 14.2. General line search; 14.3. Preconditioning; A. Notes; B. Canned algorithms; B1. Steepest descent; B2. Conjugate gradients; B3. Preconditioned conjugate gradients.

Chapter 5. Conjugate gradient methods. This chapter is dedicated to studying the conjugate gradient (CG) methods in detail. Both the linear and the nonlinear versions of CG are discussed, with five subclasses falling under the nonlinear CG class. The five nonlinear CG methods discussed are: the Fletcher-Reeves method, ...

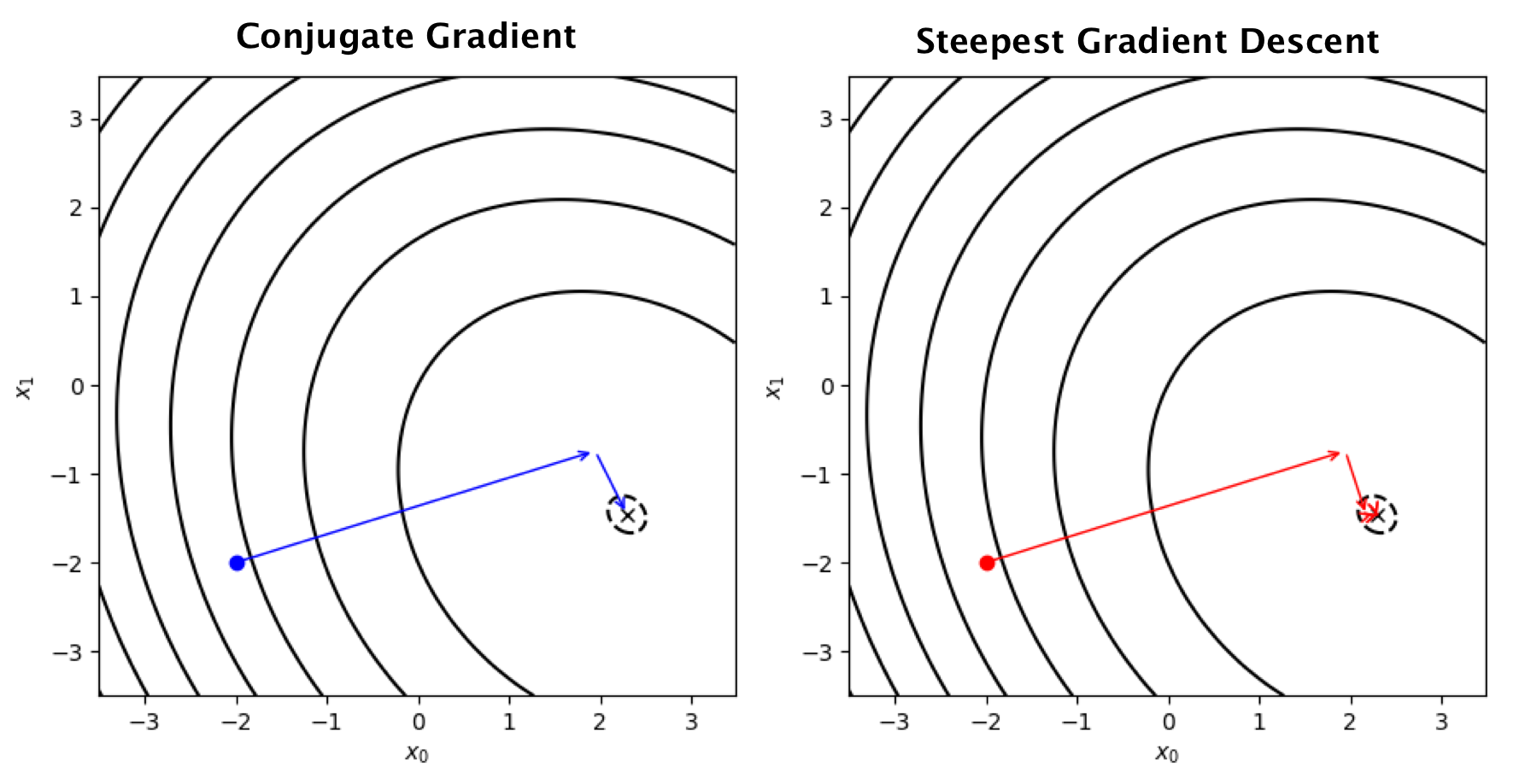

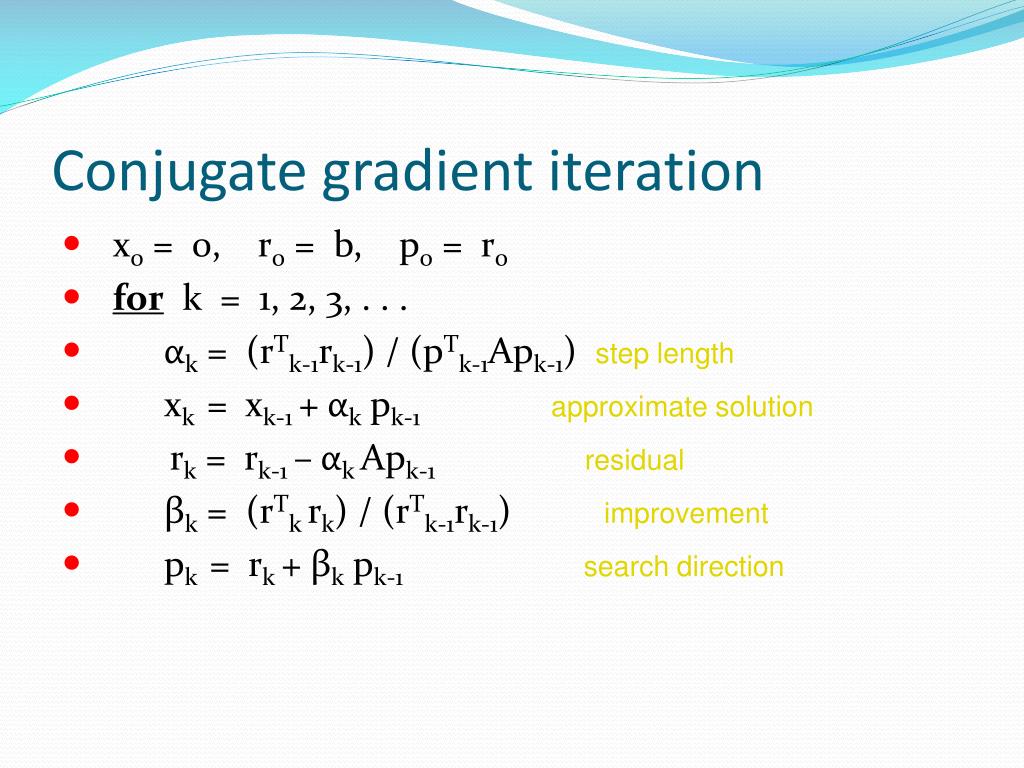

Conjugate Gradient Methods Cornell University Computational

Conjugate gradient, assuming exact arithmetic, converges in at most $n$ steps, where $n$ is the size of the matrix of the system (here $n = 2$). In mathematics, the conjugate gradient method is an algorithm for the numerical solution of particular systems of linear equations, namely those whose matrix is symmetric and positive definite.

Idea: apply CG after a linear change of coordinates $x = Ty$, $\det T \neq 0$. Use CG to solve $T^T A T y = T^T b$; then set $x^\star = T y^\star$. $T$, or $M = T T^T$, is called the preconditioner. In a naive implementation, each iteration requires multiplies by $T$ and $T^T$ (and $A$), and $x^\star = T y^\star$ must also be computed at the end.

The conjugate gradient method is often implemented as an iterative algorithm and can be considered as being between Newton's method, a second-order method that incorporates the Hessian and the gradient, and the method of steepest descent, a first-order method that uses only the gradient. Newton's method usually reduces the number of iterations needed, but the ...

... If we had to do that, then conjugate gradient would not be efficient: the work at the $k$-th iteration would grow with $k$. Proof. (1) $\Rightarrow$ (2): note that $x_k$ being the minimizer of $f(x)$ on the affine subspace $x_0 + \mathcal{K}_k(A; r_0)$ means that the gradient $\nabla f(x_k)$ must be perpendicular to the subspace $\mathcal{K}_k(A; r_0)$. But the gradient is just $-r_k$.
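The "at most n steps" claim can be tried on a small system. The following is a minimal sketch in plain Python, not the implementation from any of the sources quoted here: the function names (`matvec`, `dot`, `conjugate_gradient`), the zero starting point, the tolerance, and the 2 × 2 example matrix are all assumptions chosen for illustration.

```python
def matvec(A, x):
    """Dense matrix-vector product for a list-of-rows matrix."""
    return [sum(a * xj for a, xj in zip(row, x)) for row in A]


def dot(u, v):
    """Euclidean inner product of two vectors."""
    return sum(ui * vi for ui, vi in zip(u, v))


def conjugate_gradient(A, b, tol=1e-10, max_iter=None):
    """Solve A x = b for symmetric positive definite A (hypothetical helper).

    Returns the approximate solution and the number of iterations performed.
    """
    n = len(b)
    if max_iter is None:
        max_iter = n          # exact arithmetic needs at most n steps
    x = [0.0] * n             # assumed starting point x_0 = 0
    r = list(b)               # residual r_0 = b - A x_0 = b
    p = list(r)               # first search direction is the residual
    rs_old = dot(r, r)
    iterations = 0
    for _ in range(max_iter):
        if rs_old ** 0.5 <= tol:
            break
        Ap = matvec(A, p)
        alpha = rs_old / dot(p, Ap)                      # exact line search step
        x = [xi + alpha * pi for xi, pi in zip(x, p)]
        r = [ri - alpha * api for ri, api in zip(r, Ap)]
        rs_new = dot(r, r)
        beta = rs_new / rs_old                           # keeps directions A-conjugate
        p = [ri + beta * pi for ri, pi in zip(r, p)]
        rs_old = rs_new
        iterations += 1
    return x, iterations


# Illustrative 2 x 2 symmetric positive definite system (values are assumptions).
A = [[4.0, 1.0], [1.0, 3.0]]
b = [1.0, 2.0]
x, iterations = conjugate_gradient(A, b)
print(x, iterations)
```

For this system the exact solution is x = (1/11, 7/11), and the loop stops after no more than two iterations, matching the n = 2 bound up to floating-point rounding. Only matrix-vector products with A are needed, which is why the method suits large sparse systems.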

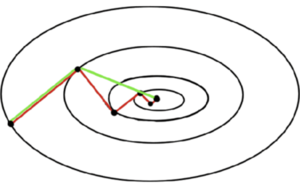

Blog Conjugate Gradient 1 Pattarawat Chormai

Conjugate gradient for solving a linear system. Consider a linear equation $Ax = b$, where $A$ is an $n \times n$ symmetric positive definite matrix and $x$ and $b$ are $n \times 1$ vectors. Solving this equation for $x$ is equivalent to minimizing the convex function $f(x) = \tfrac{1}{2} x^T A x - b^T x$.

Outline: 9. Convergence analysis of conjugate gradients; 9.1. Picking perfect polynomials; 9.2. Chebyshev polynomials; 10. Complexity; 11. Starting and stopping; 11.1. Starting; 11.2. Stopping; 12. Preconditioning; 13. Conjugate gradients on the normal equations; 14. The nonlinear conjugate gradient method; 14.1. Outline of the ...
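The equivalence between solving the linear system and minimizing a quadratic follows from setting the gradient to zero. The short derivation below takes $f$ to be the usual quadratic form associated with $Ax = b$ (the standard choice, assumed here) and uses only the symmetry and positive definiteness of $A$:

```latex
\begin{aligned}
f(x) &= \tfrac{1}{2}\, x^{T} A x - b^{T} x, \\
\nabla f(x) &= \tfrac{1}{2}\,(A + A^{T})\,x - b = A x - b
  \quad \text{since } A = A^{T}, \\
\nabla f(x^{\star}) = 0 &\iff A x^{\star} = b, \\
\nabla^{2} f(x) &= A \succ 0
  \;\Longrightarrow\; f \text{ is strictly convex and } x^{\star} \text{ is its unique minimizer.}
\end{aligned}
```

In particular, the negative gradient $-\nabla f(x) = b - Ax$ is the residual of the linear system, which is why residuals serve as descent directions in both steepest descent and conjugate gradient.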


