How Should AI Be Regulated to Mitigate Security Risks? (Portnox)

Certification and standards: Regulatory efforts should include establishing certification processes and standards for AI systems. These standards should guide the design, development, deployment, and maintenance of AI in cybersecurity, and should cover data privacy, transparency, accountability, and the robustness of the AI system.

Continuous training and updates: Ensure that AI models used for cybersecurity are continuously trained on the latest threat intelligence to stay ahead of emerging threats.

Collaboration with AI experts: Foster partnerships with AI specialists to enhance the organization’s defensive capabilities.

3. Cloud security vulnerabilities challenge: …

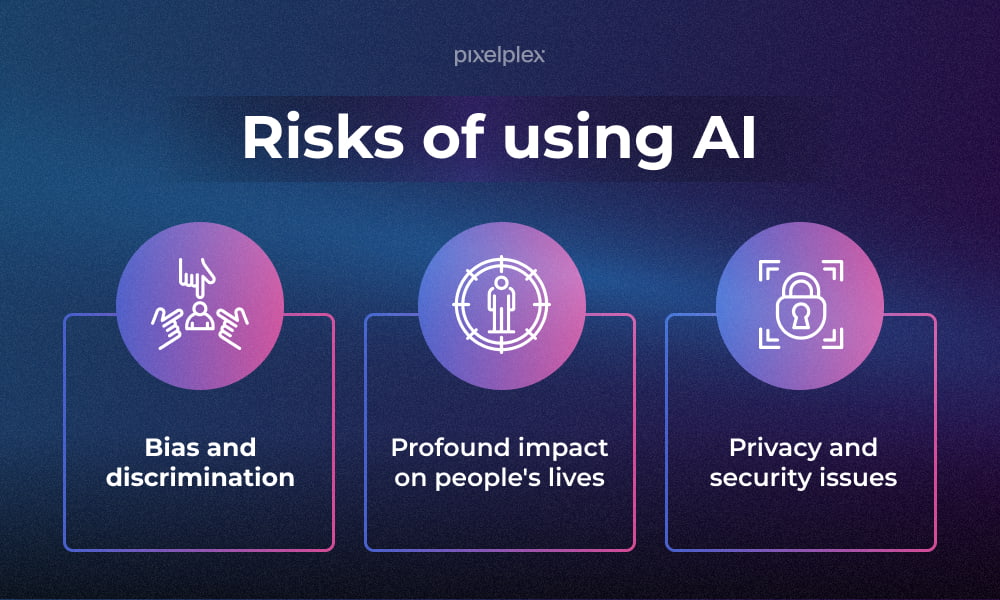

AI Regulation: How Is This Tech Regulated Globally, and Why?

The EU’s AI Act. The European Union is finalizing the AI Act, a sweeping regulation that aims to regulate the most “high risk” usages of AI systems. First proposed in 2021, the bill would…

There are tests that judge AI on solving puzzles, logical reasoning, or how swiftly and accurately it predicts what text will answer a person's chatbot query. Those measurements help assess an AI…

The truth is that none of these tools alone can sufficiently mitigate the risks of generative AI. Combined approaches of technical and socio-technical tools are needed, varying with the use case, the organization and its resources (know-how, financial), and the product. The next crucial step is to trial these solutions in practice.

AI is often described as a “black box,” but the regulations, frameworks, and guidelines surrounding it can seem just as mystifying. A new report provides clarity. Responsible AI (RAI) helps organizations reduce risks and build trust around AI. But getting RAI right depends on knowing how different governance mechanisms fit together.

Should AI Be Regulated? Exploring the Benefits, Risks, and Implications

Ryan Naraine, April 29, 2024. The US government’s cybersecurity agency, CISA, has rolled out a series of guidelines aimed at strengthening the safety and security of critical infrastructure against AI-related threats. The newly released guidelines categorize AI risks into three significant types: the utilization of AI in attacks on infrastructure…

Here are some strategies for AI model implementation:

• Implement AI model monitoring and security evaluations. By doing this, organizations can stay vigilant against potential threats, identify…
