How To Fine Tune A Model And Export It To Ollama Locally

How To Run Custom Fine Tuned Llama2 Model Into Ollama · Issue 765

This video is an easy, step-by-step tutorial on fine-tuning a model on custom data and then exporting it to Ollama using Unsloth. A typical end-to-end workflow:

1. Fine-tune a Llama 3 model on a medical dataset.
2. Merge the adapter with the base model and push the full model to the Hugging Face Hub.
3. Convert the model files into the llama.cpp GGUF format.
4. Quantize the GGUF model and push the file to the Hugging Face Hub.
5. Use the fine-tuned model locally with the Jan application.
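The conversion and quantization steps can be sketched as two llama.cpp command lines. The snippet below only builds the commands and does not run them. It assumes a recent llama.cpp checkout in which the converter script is named `convert_hf_to_gguf.py` and the quantizer binary is `llama-quantize` (older checkouts used different names); the directory and file names are hypothetical.

```python
import shlex
from pathlib import Path


def gguf_commands(merged_dir: str, out_dir: str, quant: str = "Q4_K_M") -> list:
    """Build (but do not run) the llama.cpp commands for GGUF conversion and quantization.

    Assumption: script and binary names follow a recent llama.cpp checkout.
    """
    out = Path(out_dir)
    f16_path = out / "model-f16.gguf"                  # hypothetical file name
    quant_path = out / f"model-{quant.lower()}.gguf"   # hypothetical file name

    # Convert the merged Hugging Face model directory to a 16-bit GGUF file.
    convert = [
        "python", "convert_hf_to_gguf.py", merged_dir,
        "--outfile", str(f16_path),
        "--outtype", "f16",
    ]
    # Quantize the 16-bit GGUF file to the requested quantization type.
    quantize = ["llama-quantize", str(f16_path), str(quant_path), quant]
    return [convert, quantize]


if __name__ == "__main__":
    for cmd in gguf_commands("merged-model", "gguf"):
        print(shlex.join(cmd))
```

Keeping the commands as lists makes them easy to pass to `subprocess.run` later, once the paths have been checked against your own setup.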

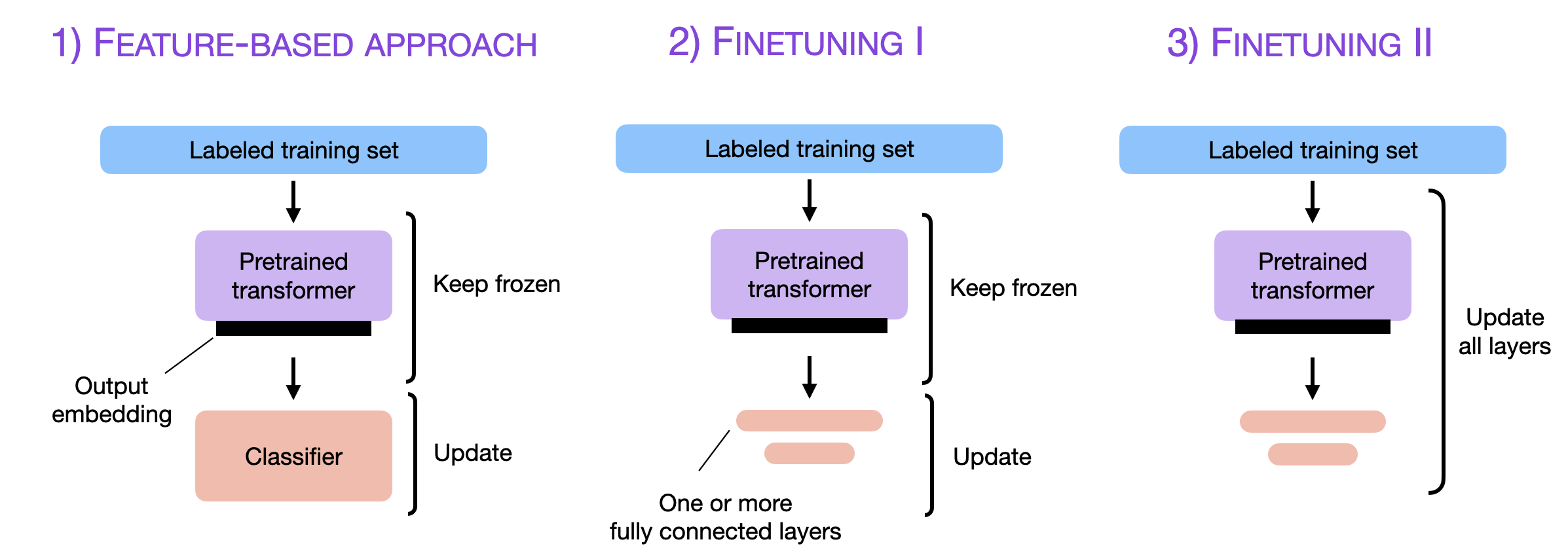

How To Use Hugging Face To Fine Tune Ollama's Local Model: Beginners

Finally, we can export our fine-tuned model to Ollama itself. First, install Ollama in the Colab notebook. Then export the fine-tuned model to llama.cpp's GGUF format. Reminder: in the export code, change False to True for only one row, not every row, or else you will be waiting for a very long time.

Ollama is designed solely for model inference. For training or fine-tuning models, you need tools such as Hugging Face, TensorFlow, or PyTorch. The resulting model can then be run locally in Ollama, just like a stock model such as Llama 3.1.

Full-parameter fine-tuning updates all the parameters of all the layers of the pre-trained model. In general, it can achieve the best performance, but it is also the most resource-intensive and time-consuming approach: it requires the most GPU resources and takes the longest. PEFT, or parameter-efficient fine-tuning, allows one to fine-tune a model while updating only a small fraction of its parameters.

Ollama excels at running pre-trained models, and it can also run models that have been fine-tuned elsewhere for specific tasks. Let's look at the steps required to fine-tune a model and run it.
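To make the resource gap between full-parameter fine-tuning and PEFT concrete, here is a small arithmetic sketch. It assumes LoRA as the PEFT method (the text above does not name one): for a weight matrix of shape `d_out x d_in`, LoRA trains two low-rank factors with `rank * (d_in + d_out)` parameters in total. The layer size and rank are hypothetical.

```python
def full_params(d_in: int, d_out: int) -> int:
    """Trainable parameters when the whole weight matrix is updated."""
    return d_in * d_out


def lora_trainable_params(d_in: int, d_out: int, rank: int) -> int:
    """Trainable parameters for one LoRA adapter.

    A has shape (rank x d_in) and B has shape (d_out x rank).
    """
    return rank * (d_in + d_out)


if __name__ == "__main__":
    d = 4096   # hypothetical hidden size of one projection matrix
    r = 16     # hypothetical LoRA rank
    full = full_params(d, d)
    lora = lora_trainable_params(d, d, r)
    print(f"full: {full:,}  lora: {lora:,}  ratio: {lora / full:.4%}")
```

For this single matrix the adapter trains under one percent of the parameters that full fine-tuning would, which is why adapter-based methods fit on much smaller GPUs.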

Introduction To Ollama: Part 1, Using Ollama To Run LLMs Locally

There, you can scroll down and select the “Llama 3 Instruct” model, then click the “Download” button. After the download completes, close the tab and select the Llama 3 Instruct model from the “Choose a model” dropdown menu. Type a prompt and start using it like ChatGPT.

To run our fine-tuned model on Ollama, open your terminal and run `ollama pull llama-brev`. Here, llama-brev is the name of my fine-tuned model and the name I gave my Modelfile when I pushed it to the Ollama registry; replace it with your own model name and Modelfile name. Once the pull completes, the model is ready to query.
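The Modelfile mentioned above is a short text file that tells Ollama which weights to load and how to configure them. The sketch below renders a minimal one using the `FROM`, `PARAMETER`, and `SYSTEM` instructions; the GGUF path, system prompt, and temperature are hypothetical values, and the helper itself is only an illustration, not part of Ollama.

```python
from typing import Optional


def render_modelfile(
    gguf_path: str,
    system_prompt: str = "",
    temperature: Optional[float] = None,
) -> str:
    """Render a minimal Ollama Modelfile as text (illustrative helper)."""
    lines = [f"FROM {gguf_path}"]  # local GGUF file to load
    if temperature is not None:
        lines.append(f"PARAMETER temperature {temperature}")
    if system_prompt:
        # Simple case only: the prompt must not itself contain triple quotes.
        lines.append(f'SYSTEM """{system_prompt}"""')
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    text = render_modelfile(
        "./model-q4_k_m.gguf",            # hypothetical path
        "You are a helpful assistant.",   # hypothetical system prompt
        0.7,
    )
    print(text)
    # After saving this text to a file named Modelfile, a local model can be
    # registered with: ollama create <your-model-name> -f Modelfile
```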

Bringing Your Fine Tuned MLX Model To Life With Ollama Integration
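Once a fine-tuned model is available in a local Ollama instance, it can also be queried programmatically instead of from the terminal. The sketch below uses only the standard library and assumes Ollama is serving on its default local address; the model name is a hypothetical placeholder for whatever name you created or pulled.

```python
import json
import urllib.request

# Assumption: Ollama is running locally on its default port.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_generate_request(
    model: str, prompt: str, url: str = OLLAMA_URL
) -> urllib.request.Request:
    """Build a non-streaming POST request for Ollama's generate endpoint."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(model: str, prompt: str) -> str:
    """Send the prompt and return the generated text (needs a running server)."""
    with urllib.request.urlopen(build_generate_request(model, prompt)) as resp:
        return json.loads(resp.read())["response"]


# Example call, left commented out because it requires a running Ollama server:
# print(generate("my-finetuned-model", "Summarize what GGUF is in one sentence."))
```

Separating request construction from the network call makes the payload easy to inspect before anything is sent.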

Understanding Parameter Efficient Finetuning Of Large Language Models
