Multi-Layer Neural Networks With Sigmoid Function: Deep Learning For …

Deep Learning: Feed-Forward Neural Networks (FFNNs), by Mohammed Terry

Multi-layer neural networks: an intuitive approach. Alright. So far we have introduced hidden layers in a neural network and replaced perceptrons with sigmoid neurons. We also introduced the idea that a non-linear activation function lets the network classify data whose decision boundaries or patterns are non-linear.

A sigmoid function accepts any real number as input, so its domain is (-∞, ∞). Its output is a value strictly between 0 and 1, which is often interpreted as a probability, so its range is (0, 1). The value of the sigmoid function at x = 0 is 0.5, since σ(0) = 1/(1 + e^0) = 1/2.
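The properties listed above can be checked numerically. This is a minimal sketch, assuming the standard logistic form σ(x) = 1/(1 + e^(-x)); the function name `sigmoid` and the two-branch formulation (which keeps `math.exp` from overflowing on large negative inputs) are illustrative choices, not something taken from the source.

```python
import math

def sigmoid(x: float) -> float:
    """Logistic sigmoid 1 / (1 + e^(-x)), computed without overflow."""
    if x >= 0:
        # e^(-x) <= 1 here, so this branch cannot overflow
        return 1.0 / (1.0 + math.exp(-x))
    # For negative x, use the equivalent form e^x / (1 + e^x);
    # e^x < 1 here, so math.exp never overflows
    ez = math.exp(x)
    return ez / (1.0 + ez)

# Any real number is a valid input: the domain is (-inf, inf).
for x in [-10.0, -2.0, 0.0, 2.0, 10.0]:
    y = sigmoid(x)
    # For these inputs every output lies strictly between 0 and 1.
    print(f"sigmoid({x:+.1f}) = {y:.6f}")

# At x = 0 the output is exactly 0.5, because e^0 = 1.
print(sigmoid(0.0))
```

One caveat of floating-point arithmetic: large positive inputs (roughly x > 36) round to exactly 1.0 in double precision, and very large negative inputs eventually underflow to 0.0, even though the mathematical range is the open interval (0, 1).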

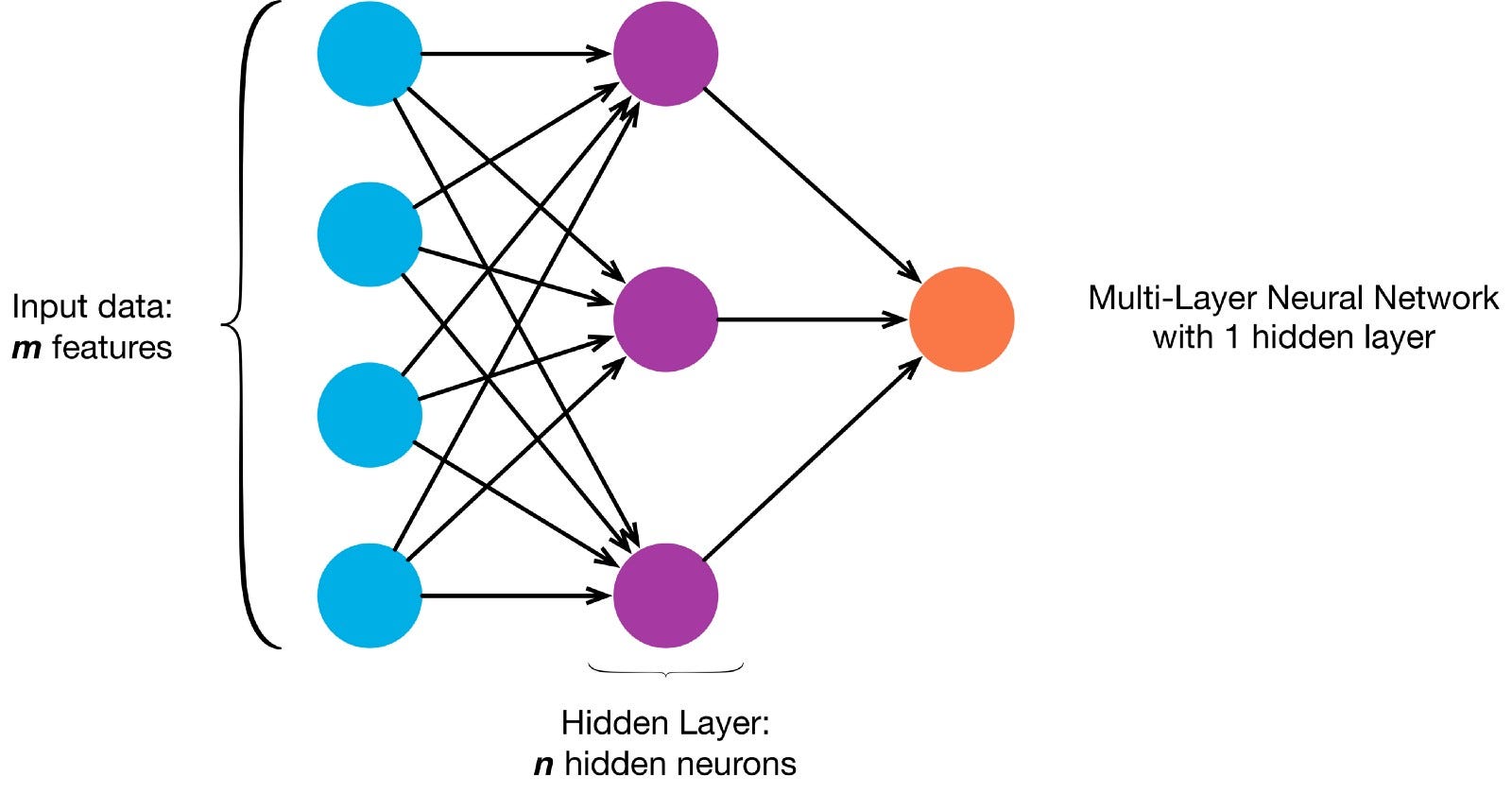

For example, here is a small neural network. In this figure, circles are also used to denote the inputs to the network. The circles labeled “+1” are called bias units and correspond to the intercept term. The leftmost layer of the network is called the input layer, and the rightmost layer is called the output layer (which, in this …).

The Keras Python library for deep learning focuses on creating models as a sequence of layers. In this post, you will discover the simple components you can use to create neural networks and simple deep learning models using Keras from TensorFlow. Let’s get started. May 2016: first version. Update Mar 2017: updated example for Keras 2.0.2, […]

Our neural network can be described as a composition of multiple functions, $p(z_h(h(z(x))))$, where $x$ is the input at the input layer, $z$ is the linear transformation of $x$, $h$ is the sigmoid activation function at the hidden layer, $z_h$ is the linear transformation of $h$, and $p$ is the sigmoid prediction of the model at the output layer.

The sigmoid function is used as an activation function in neural networks. To review what an activation function does, the figure below shows its role in one layer of a neural network: a weighted sum of the inputs is passed through the activation function, and the result serves as an input to the next layer.
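The composition `p(z_h(h(z(x))))` and the "weighted sum, then activation" step can be sketched as a plain-Python forward pass. This is a minimal sketch under stated assumptions: the 2-3-1 layer sizes and all weight values are hypothetical (the text gives neither), the bias is stored as a separate vector `b` rather than as an explicit "+1" input unit (the two are equivalent), and the helper names `linear` and `forward` are illustrative only, not from any library.

```python
import math
from typing import List

Vector = List[float]
Matrix = List[List[float]]

def sigmoid(v: float) -> float:
    # Uses the identity sigmoid(v) = (1 + tanh(v / 2)) / 2,
    # which equals 1 / (1 + e^(-v)) but never overflows.
    return 0.5 * (1.0 + math.tanh(0.5 * v))

def linear(W: Matrix, b: Vector, x: Vector) -> Vector:
    # Each output is a weighted sum of the inputs plus a bias term
    # (equivalent to a "+1" bias unit whose outgoing weight is b[i]).
    return [sum(w * xj for w, xj in zip(row, x)) + bi for row, bi in zip(W, b)]

def forward(x: Vector, W1: Matrix, b1: Vector, W2: Matrix, b2: Vector) -> Vector:
    z = linear(W1, b1, x)          # z(x): linear transformation of the input
    h = [sigmoid(v) for v in z]    # h(z): sigmoid activation at the hidden layer
    z_h = linear(W2, b2, h)        # z_h(h): linear transformation of the hidden activations
    p = [sigmoid(v) for v in z_h]  # p(z_h): sigmoid prediction at the output layer
    return p

# Hypothetical 2-3-1 network: 2 inputs, 3 hidden sigmoid units, 1 output unit.
W1 = [[0.5, -0.4], [0.3, 0.8], [-0.6, 0.1]]  # 3 x 2 hidden-layer weights
b1 = [0.1, -0.2, 0.05]
W2 = [[0.7, -0.5, 0.2]]                       # 1 x 3 output-layer weights
b2 = [0.0]

print(forward([1.0, 2.0], W1, b1, W2, b2))
```

A quick sanity check on the wiring: with all weights and biases set to zero, every hidden unit outputs sigmoid(0) = 0.5 and the network predicts 0.5. In Keras, a comparable architecture would be a `Sequential` model of two `Dense` layers (3 units, then 1 unit), each with `activation="sigmoid"`.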
