Multiple Regression in SPSS: Insignificant Coefficients, Significant F

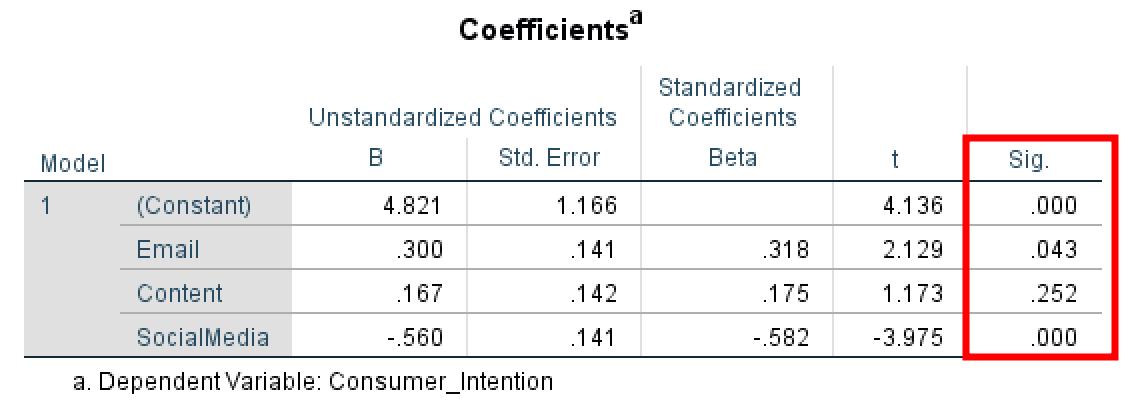

To calculate the F test of overall significance, your statistical software just needs to include the proper terms in the two models that it compares. The overall F test compares the model that you specify to the model with no independent variables, which is also known as an intercept-only model.

I have conducted the multiple regressions for each predictor, but I am confused by the SPSS output. For my first test, for concealing, the F statistic is significant (SPSS shows p = 0.000, i.e. p < 0.001), yet the t tests for 2 of the 3 predictors are clearly not significant (p = 0.384, p = 0.618).
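The comparison behind the overall F test can be sketched in a few lines of plain Python. This is only an illustration, not SPSS's own routine: the function name `overall_f` and the toy data are assumptions, and the fitted values are taken from an ordinary least-squares line fitted by hand to the toy data.

```python
def overall_f(y, fitted, n_predictors):
    """Overall F statistic: the fitted model versus the intercept-only model."""
    n = len(y)
    mean_y = sum(y) / n
    # The intercept-only model predicts the mean of y for every case.
    sse_null = sum((yi - mean_y) ** 2 for yi in y)
    # Residual sum of squares of the model that was actually specified.
    sse_full = sum((yi - fi) ** 2 for yi, fi in zip(y, fitted))
    df_model = n_predictors
    df_resid = n - n_predictors - 1
    return ((sse_null - sse_full) / df_model) / (sse_full / df_resid)


# Toy data (hypothetical): y regressed on x = 1..5 gives y_hat = 0.6 + 0.8 * x.
y = [2, 1, 4, 3, 5]
fitted = [1.4, 2.2, 3.0, 3.8, 4.6]
f_value = overall_f(y, fitted, n_predictors=1)
```

The value in `f_value` corresponds to the F in the ANOVA table of the SPSS regression output; its p-value comes from an F distribution with `df_model` and `df_resid` degrees of freedom.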

Nice work! I just wonder about one specific example I ran into (on real data): logistic regression with p = 10 predictors and n = 500 observations (balanced response classes), largest VIF < 1.5, where one ends up with a significant full-model likelihood ratio test (at 0.0003) and all predictors insignificant (the two smallest p-values at about 0.11, the rest > 0.25).

Out of seven independent variables (predictors), six are not significant (p > 0.05), but their correlation values are small to moderate. Moreover, the p-value of the regression itself is significant (p < 0.005; Table 2). I understand in a partial least squares analysis or SEM, the weights (standardized …

The F test of overall significance in regression is a test of whether or not your linear regression model provides a better fit to a dataset than a model with no predictor variables. The F test of overall significance has the following two hypotheses: null hypothesis (H0): the model with no predictor variables (also known as an intercept-only …

In general, an F test in regression compares the fits of different linear models. Unlike t tests, which can assess only one regression coefficient at a time, the F test can assess multiple coefficients simultaneously. The F test of overall significance is a specific form of the F test: it compares a model with no predictors to the model that you specify.
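In symbols, the overall F test for a linear model with k predictors and n observations can be written as follows. The notation is an assumption chosen for illustration and does not come from the quoted sources: SSE_0 is the residual sum of squares of the intercept-only model and SSE_1 that of the specified model.

```latex
\[
H_0:\ \beta_1 = \beta_2 = \dots = \beta_k = 0
\qquad \text{vs.} \qquad
H_A:\ \beta_j \neq 0 \ \text{for at least one } j
\]
\[
F = \frac{(SSE_0 - SSE_1)/k}{SSE_1/(n - k - 1)}
\;\sim\; F_{k,\; n-k-1} \quad \text{under } H_0
\]
```

The numerator pools the joint contribution of all k predictors, whereas each t test asks only what one predictor adds once the others are already in the model. That is why F can be significant while every individual coefficient is not; correlated predictors are one common cause.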

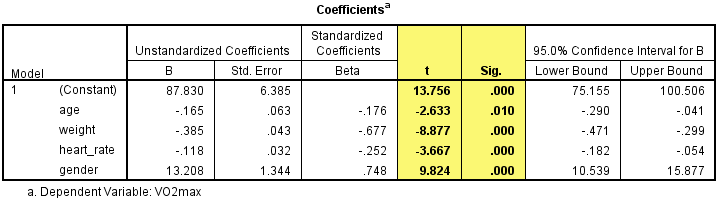

How to Perform a Multiple Regression Analysis in SPSS Statistics

Multiple regression is an extension of simple linear regression. It is used when we want to predict the value of a variable based on the value of two or more other variables. The variable we want to predict is called the dependent variable (or sometimes the outcome, target or criterion variable). The variables we are using to predict the value …

The raw regression coefficients are partial regression coefficients: because their values take into account the other predictor variables in the model, they inform us of the predicted change in the dependent variable for every unit increase in that predictor. For example, positive affect is associated with a partial regression coefficient of …
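The "every unit increase" reading of a partial regression coefficient can be checked with a small sketch. The intercept, coefficient values and predictor names below are hypothetical, made up for illustration; they are not taken from any SPSS output discussed here.

```python
def predict(intercept, coefs, values):
    """Predicted outcome from raw (unstandardized) partial regression coefficients."""
    return intercept + sum(coefs[name] * values[name] for name in coefs)


# Hypothetical model: y_hat = 2.0 + 0.5 * x1 - 1.25 * x2
coefs = {"x1": 0.5, "x2": -1.25}

base = predict(2.0, coefs, {"x1": 3.0, "x2": 4.0})
# Raise x1 by one unit while holding x2 fixed.
bumped = predict(2.0, coefs, {"x1": 4.0, "x2": 4.0})

# The change in the prediction equals the coefficient of x1.
change = bumped - base
```

The "holding x2 fixed" part is what makes the coefficient partial: in a model without x2, the coefficient of x1 would generally be different.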

