Question: Oculus Hand Tracking User Interface (Unity Forum)

Hi everybody, I'm trying to develop a VR app using Oculus Integration that supports hand tracking. I want to make a simple user interface (buttons, sliders and checkboxes) and interact with it using my hands. I tried using the UIHelpers and the OVRRaycaster script, but it didn't work properly with hands because the raycaster is wrongly oriented (photo below ...

Hand tracking is working on the device in the Quest OS and during Quest Link. When the Unity app is running and my palms are facing the headset, the Oculus menu buttons are visible but the hand mesh is not. Steps to reproduce: create a new Unity project. Install Oculus Integration and XR Plugin Management (select Oculus as provider). Open any hand ...

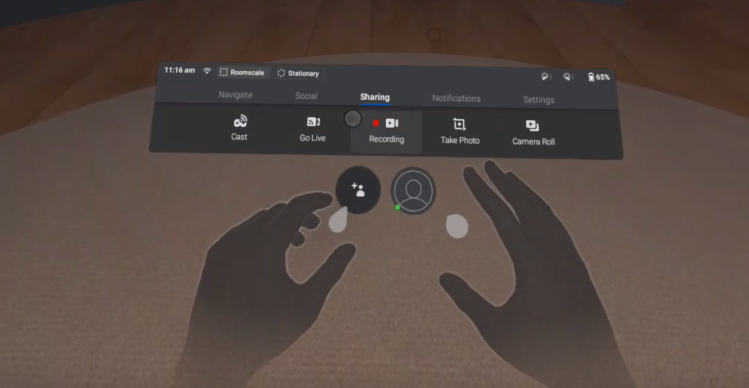

The system needs to track the user's hands, and I chose to use the built-in Meta Building Blocks to achieve that. Currently I'm using the Meta XR All-in-One SDK v65.0.0 package. To check whether hand tracking is working correctly, I use the 'Synthetic Hands' building block from the same package. Now comes the funny part: I noticed that ...

Claytonious, February 10, 2024, 3:22pm: We're using the XR Hands package (and XRController to some extent) to let the user manipulate things with his hands. On Oculus Quest, even at the default settings, the hands are smooth and the user can do delicate manipulations successfully. But on a Vision Pro, the hands are very jittery, especially ...

I'm working on a Unity project, trying to test UI interaction on the Quest 2 with hand tracking and ray casting. I've set up a very basic scene with all the default assets (OVRCameraRig, UIHelper) and added just a button to test the UI interaction. This is what my scene looks like: ...

Solved. Hi! I am able to build and run my app for Oculus Quest 2 using Unity 2020.2. However, I'm trying to get hand interaction to work. The HandInteractionTrainScene from the Oculus Integration package tells me I need to enable hand tracking. I've searched around a bit and this should be solved by setting the "hand tracking support ...

All the documentation looks like it is in place on the Unity XR Input page for hand tracking. However, with the Oculus Quest 2 I get back 3 tracked-device XR nodes (left controller, right controller and headset), but 0 hand tracking nodes with GetDevicesWithCharacteristics.

I've tried Unity 2020.3.0 and 2020.3.26. Oculus app version 35.0.0.73.175, Unity Oculus Integration version 35.0, Quest headset version 22310100490800000. I can't imagine what to try next. I feel like I've tried everything. I even tried creating a new Unity project, imported the Oculus samples, and tried the hand tracking train sample.
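
For anyone trying to reproduce the GetDevicesWithCharacteristics report, a minimal sketch of that query might look like the script below. It is not taken from any of the posts: the class name `HandTrackingDeviceProbe` is made up for illustration, and it assumes a project with XR Plugin Management set up and the script attached to an object in the scene. It only logs what Unity's XR input system returns, so a count of 0 would match the behaviour described above rather than explain it.

```csharp
using System.Collections.Generic;
using UnityEngine;
using UnityEngine.XR;

// Hypothetical helper for illustration; not part of Oculus Integration.
public class HandTrackingDeviceProbe : MonoBehaviour
{
    void Start()
    {
        // Ask the XR input system for every device flagged as hand tracking.
        var devices = new List<InputDevice>();
        InputDevices.GetDevicesWithCharacteristics(
            InputDeviceCharacteristics.HandTracking, devices);

        Debug.Log($"Hand tracking devices found: {devices.Count}");

        // List each device with its full characteristics mask for comparison.
        foreach (var device in devices)
        {
            Debug.Log($"{device.name}: {device.characteristics}");
        }
    }
}
```

Running the same query with `InputDeviceCharacteristics.TrackedDevice` should list the headset and controllers, which gives a baseline to compare against.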
