Recurrent Neural Networks Sequence Models Natural Language Processing

Sequence Modeling Using Gated Recurrent Neural Networks (DeepAI)

These examples show that sequence models have a range of applications. Sometimes both the input and the output are sequences; in other cases only one of them is. The recurrent neural network (RNN) is a popular sequence model that has performed well on sequential data.

Language models. The aim of a language model is to minimise how confused the model is after seeing a given sequence of text. Only one language model needs to be trained per domain, because its encoder can be reused within that domain for different purposes, such as text generation and multiple different classifiers.
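The idea of "how confused the model is" is usually measured as perplexity: the exponential of the average negative log-probability the model assigned to each token that actually occurred. The following is a minimal sketch under that reading; the function name `perplexity` and the example probabilities are illustrative assumptions, not taken from any of the sources quoted here.

```python
import math


def perplexity(token_probs):
    """Perplexity from the probabilities a model gave to the tokens that occurred.

    token_probs: list of floats in (0, 1], one per token in the sequence.
    """
    if not token_probs:
        raise ValueError("need at least one token probability")
    # Average negative log-likelihood per token (cross-entropy in nats).
    avg_nll = -sum(math.log(p) for p in token_probs) / len(token_probs)
    return math.exp(avg_nll)


# Hypothetical per-token probabilities for two models on the same text.
confident = perplexity([0.9, 0.8, 0.95])
uncertain = perplexity([0.25, 0.25, 0.25])
```

Lower is better. A model that always assigns probability 0.25 to the correct token is as confused as if it were choosing uniformly among four options, so its perplexity is about 4.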

Simple Explanation Of Recurrent Neural Network (RNN)

In the fifth course of the Deep Learning Specialization, you will become familiar with sequence models and their applications, such as speech recognition, music synthesis, chatbots, machine translation, natural language processing (NLP), and more. By the end, you will be able to build and train recurrent neural networks (RNNs) and ...

Natural language processing (NLP) is a crucial part of artificial intelligence (AI), modeling how people share information. In recent years, deep learning approaches have obtained very high performance on many NLP tasks. In this course, students gain a thorough introduction to cutting-edge neural networks for NLP.

Recurrent neural network. In an RNN, x(t) is the input to the network at time step t. The time step t indicates the position at which a word occurs in a sentence or sequence. The hidden state h(t) is a contextual vector at time t and acts as the "memory" of the network.

There are 3 modules in this course. In Course 3 of the Natural Language Processing Specialization, you will: a) train a neural network with GloVe word embeddings to perform sentiment analysis of tweets, b) generate synthetic Shakespeare text using a gated recurrent unit (GRU) language model, c) train a recurrent neural network to perform named ...
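The roles of x(t) and h(t) described above can be sketched in a few lines of plain Python. This assumes the standard vanilla (Elman-style) update h(t) = tanh(W_xh x(t) + W_hh h(t-1) + b_h), which the text does not spell out; the weight names and the toy values are hypothetical.

```python
import math


def rnn_step(x_t, h_prev, W_xh, W_hh, b_h):
    """One time step: h_t = tanh(W_xh @ x_t + W_hh @ h_prev + b_h)."""
    h_t = []
    for i in range(len(b_h)):
        total = b_h[i]
        total += sum(W_xh[i][j] * x_t[j] for j in range(len(x_t)))
        total += sum(W_hh[i][k] * h_prev[k] for k in range(len(h_prev)))
        h_t.append(math.tanh(total))
    return h_t


def rnn_forward(inputs, W_xh, W_hh, b_h):
    """Run the recurrence over a sequence, returning the hidden state at every step."""
    h = [0.0] * len(b_h)  # initial "memory" is all zeros
    states = []
    for x_t in inputs:  # t = 1, 2, ... in sequence order
        h = rnn_step(x_t, h, W_xh, W_hh, b_h)
        states.append(h)
    return states


# Toy example: 2-dimensional inputs and a 2-dimensional hidden state.
W_xh = [[0.5, -0.3], [0.1, 0.8]]
W_hh = [[0.2, 0.0], [0.0, 0.2]]
b_h = [0.0, 0.0]
sequence = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]

states = rnn_forward(sequence, W_xh, W_hh, b_h)
reversed_states = rnn_forward(sequence[::-1], W_xh, W_hh, b_h)
```

Because each h(t) depends on h(t-1), feeding the same inputs in reverse order leaves a different final hidden state. That order sensitivity is what lets the hidden state act as a memory of the sequence so far.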

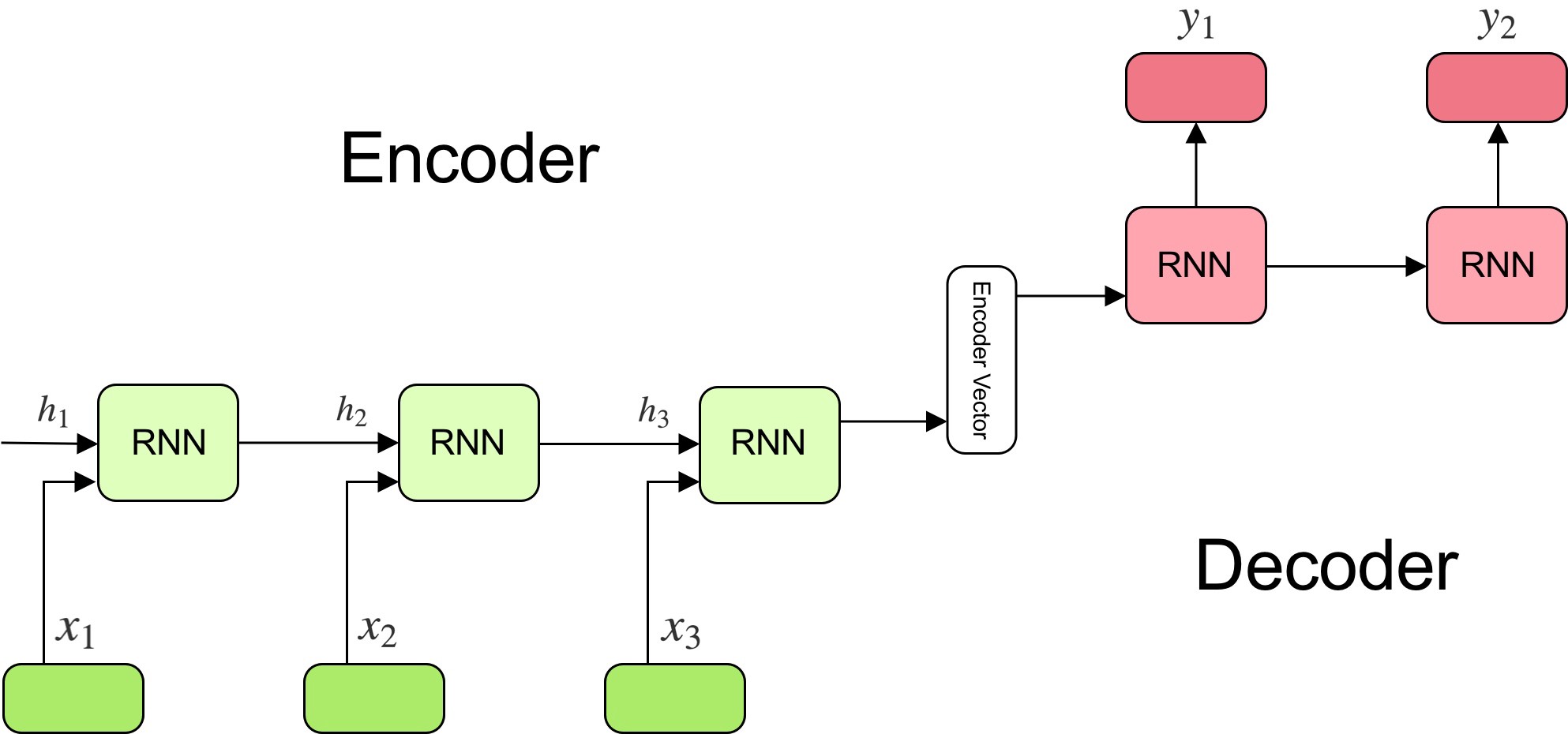

Activity Sequence And Recurrent Neural Network (RNN) Model Download

Abstract. In the modern age of information and analytics, natural language processing (NLP) is one of the most important technologies available. Making sense of complex structures in language and deriving insights and actions from them is crucial from an artificial intelligence perspective. In several domains, the importance of natural language ...

Recurrent neural networks (RNNs) are neural network architectures with a hidden state that use feedback loops to process a sequence of data, which ultimately informs the final output. RNN models can therefore recognize sequential characteristics in the data and help predict the next likely data point in the sequence.
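One common way to make "predict the next likely data point" concrete, offered here as an assumption because the passage gives no formula, is to map the hidden state to a probability distribution over candidate next items. Writing h(t) for the hidden state after the first t inputs, and treating the output weights W_hy and bias b_y as hypothetical names:

$$
p\big(x^{(t+1)} \mid x^{(1)}, \dots, x^{(t)}\big) = \operatorname{softmax}\big(W_{hy}\, h^{(t)} + b_y\big)
$$

The predicted next item is then the candidate with the highest probability under this distribution.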
