What Is Backpropagation

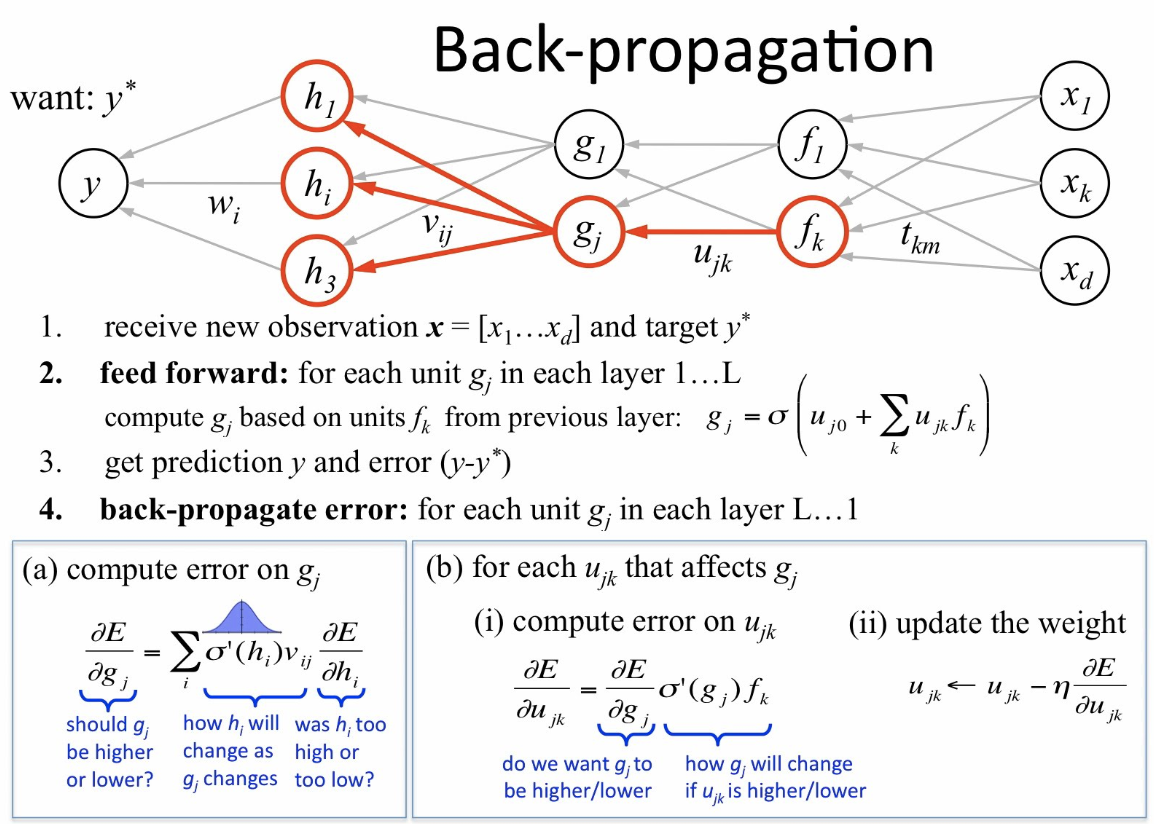

Backpropagation Is A Commonly Used… by Jorge Leonel

Backpropagation is a technique for computing the gradient of a loss function with respect to the weights of a neural network for a single input–output example. It uses the chain rule and dynamic programming to avoid redundant calculations: each layer's intermediate results are computed once and reused. The resulting gradients are then used to update the network parameters efficiently. The backpropagation algorithm is arguably the most fundamental building block for training a neural network. It was first introduced in the 1960s and, some two decades later (1986), popularized by Rumelhart, Hinton and Williams in a paper called “Learning representations by back-propagating errors”. It is the algorithm that makes training neural networks effective in practice.
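A minimal sketch of backpropagation for one input–output example, assuming a tiny hypothetical network (one sigmoid hidden layer, a linear output, squared-error loss); the architecture, sizes and function names are illustrative, not from the source. The forward pass caches the values the backward pass needs, and the backward pass reuses `delta2` to compute `delta1` instead of re-deriving the whole chain for every weight, which is the dynamic-programming idea described above. A finite-difference check confirms the analytic gradients.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def forward(params, x):
    """Forward pass; returns the prediction and the cached intermediates."""
    h = sigmoid(params["W1"] @ x + params["b1"])   # hidden activation
    y_hat = params["W2"] @ h + params["b2"]        # linear output
    return y_hat, (x, h)

def loss_fn(y_hat, y):
    return 0.5 * np.sum((y_hat - y) ** 2)          # squared-error loss

def backward(params, cache, y_hat, y):
    """Backward pass: apply the chain rule layer by layer, reusing upstream deltas."""
    x, h = cache
    delta2 = y_hat - y                             # dL/dz2 for a linear output
    delta1 = (params["W2"].T @ delta2) * h * (1.0 - h)  # reuse delta2; sigmoid' = h(1-h)
    return {
        "W2": np.outer(delta2, h), "b2": delta2,
        "W1": np.outer(delta1, x), "b1": delta1,
    }

def numerical_grad(params, x, y, name, eps=1e-6):
    """Central finite differences for one parameter array (used only as a check)."""
    g = np.zeros_like(params[name])
    for idx in np.ndindex(g.shape):
        orig = params[name][idx]
        params[name][idx] = orig + eps
        plus = loss_fn(forward(params, x)[0], y)
        params[name][idx] = orig - eps
        minus = loss_fn(forward(params, x)[0], y)
        params[name][idx] = orig                   # restore the original value
        g[idx] = (plus - minus) / (2 * eps)
    return g

rng = np.random.default_rng(0)
params = {
    "W1": rng.normal(size=(3, 2)), "b1": np.zeros(3),
    "W2": rng.normal(size=(1, 3)), "b2": np.zeros(1),
}
x, y = np.array([0.5, -1.0]), np.array([0.25])     # one hypothetical training example

y_hat, cache = forward(params, x)
grads = backward(params, cache, y_hat, y)
for name in params:
    max_err = np.max(np.abs(grads[name] - numerical_grad(params, x, y, name)))
    print(name, f"max |analytic - numeric| = {max_err:.2e}")
```

The printed differences should be tiny (on the order of floating-point noise), which is the usual way to sanity-check a hand-written backward pass.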

Backpropagation is a method for calculating how changes to a neural network's weights affect its loss, and therefore its accuracy. Concretely, it computes the derivatives inside deep feedforward neural networks that gradient descent needs, which makes it essential for training them with supervised learning. The process is designed to be seamless, but there are still some best practices that help a backpropagation-based training run perform well. One is to select the training method deliberately (for example, full-batch versus mini-batch gradient descent), because the pace of training depends on the method you choose.
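Backpropagation supplies the gradients; gradient descent then uses them to change the weights. The sketch below assumes a hypothetical toy regression task (fitting sin(x)) and a one-hidden-layer tanh network; `train`, `mse_and_grads`, the data and the hyperparameters are illustrative, not from the source. The `batch_size` argument switches between full-batch gradient descent (`batch_size=len(X)`) and mini-batch stochastic gradient descent, two training methods that progress at different paces.

```python
import numpy as np

def init_params(rng, n_in, n_hidden, n_out):
    return {
        "W1": rng.normal(scale=0.5, size=(n_in, n_hidden)), "b1": np.zeros(n_hidden),
        "W2": rng.normal(scale=0.5, size=(n_hidden, n_out)), "b2": np.zeros(n_out),
    }

def mse_and_grads(p, X, Y):
    """Forward pass over a batch, then backpropagate the mean squared error."""
    H = np.tanh(X @ p["W1"] + p["b1"])        # hidden activations, shape (n, hidden)
    Y_hat = H @ p["W2"] + p["b2"]             # linear output, shape (n, out)
    n = X.shape[0]
    loss = np.mean(np.sum((Y_hat - Y) ** 2, axis=1))
    dY = 2.0 * (Y_hat - Y) / n                # dL/dY_hat
    dH = (dY @ p["W2"].T) * (1.0 - H ** 2)    # chain rule through tanh
    grads = {
        "W2": H.T @ dY, "b2": dY.sum(axis=0),
        "W1": X.T @ dH, "b1": dH.sum(axis=0),
    }
    return loss, grads

def train(X, Y, batch_size, lr=0.05, epochs=200, seed=0):
    """Plain gradient descent; batch_size=len(X) gives full-batch training."""
    rng = np.random.default_rng(seed)
    p = init_params(rng, X.shape[1], 8, Y.shape[1])
    history = []
    for _ in range(epochs):
        order = rng.permutation(len(X))       # reshuffle the examples each epoch
        for start in range(0, len(X), batch_size):
            idx = order[start:start + batch_size]
            _, grads = mse_and_grads(p, X[idx], Y[idx])
            for k in p:
                p[k] -= lr * grads[k]         # step against the gradient
        history.append(mse_and_grads(p, X, Y)[0])  # loss on the full dataset
    return p, history

# Hypothetical toy data: learn y = sin(x) on [-2, 2].
X = np.linspace(-2, 2, 64).reshape(-1, 1)
Y = np.sin(X)

_, full_batch = train(X, Y, batch_size=len(X))
_, mini_batch = train(X, Y, batch_size=8)
print(f"full-batch loss after 200 epochs: {full_batch[-1]:.4f}")
print(f"mini-batch loss after 200 epochs: {mini_batch[-1]:.4f}")
```

For the same number of epochs, the mini-batch run takes eight noisier update steps per pass over the data while the full-batch run takes one exact step, so comparing the two loss histories is a quick way to see how the choice of training method changes the pace of training.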

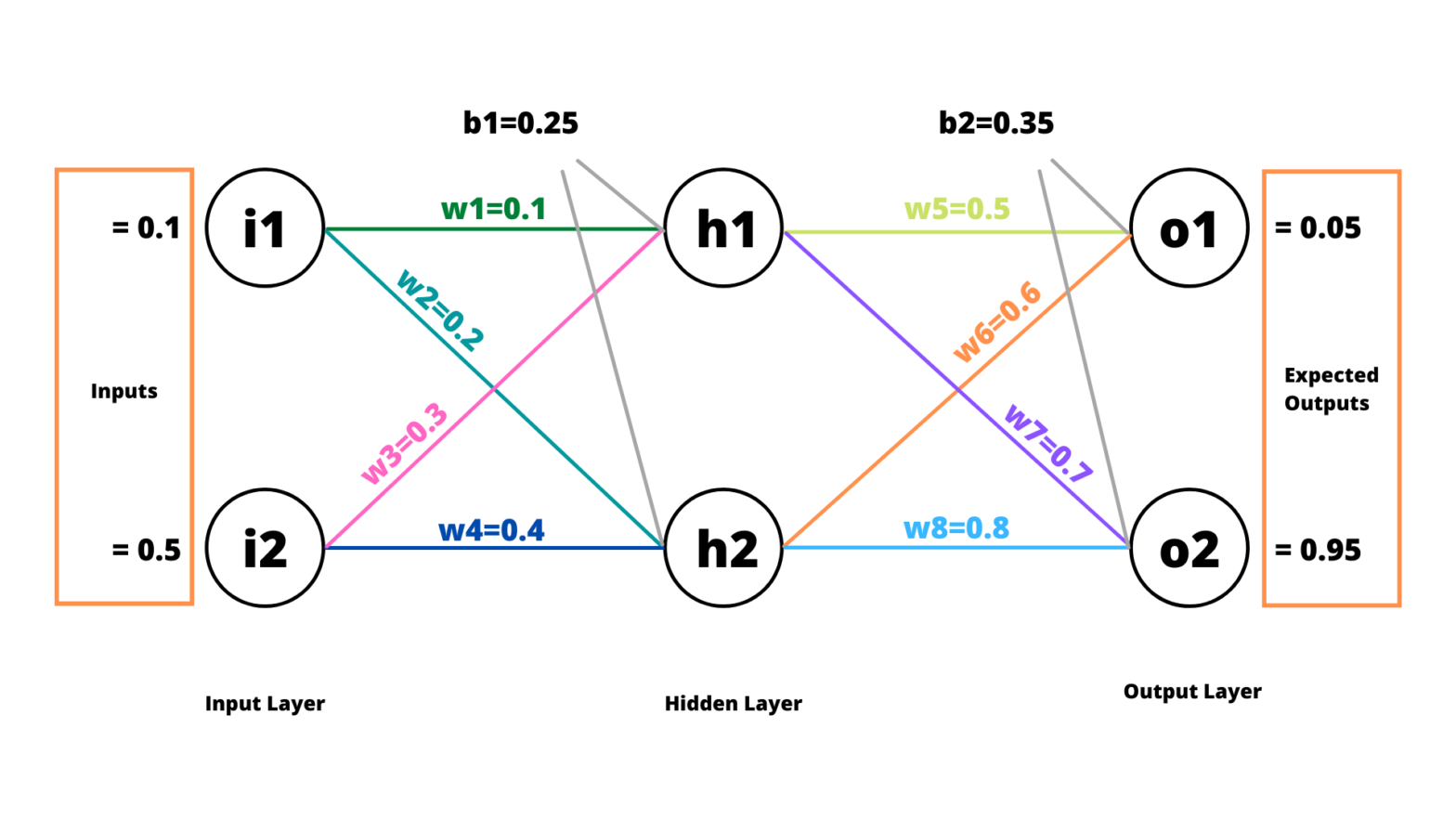

Backpropagation Example With Numbers Step By Step – A Not So Random Walk

Backpropagation identifies which pathways contribute most to the final answer, which lets us strengthen or weaken connections to arrive at a desired prediction. It is such a fundamental component of deep learning that the package of your choosing will almost certainly implement it for you. A common real-world exercise is applying backpropagation to image recognition on the MNIST dataset using the Keras library.
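To make the chain rule concrete, here is a small worked example with numbers, assuming a single sigmoid neuron with one input, a squared-error loss and hand-picked values (all hypothetical, chosen so the arithmetic stays simple). It also illustrates the "pathway" idea: the weight's gradient is twice the bias's because its input, x = 2, scales the signal flowing through that connection.

```latex
\begin{aligned}
&\text{Forward pass } (x = 2,\; w = 0.5,\; b = -1,\; y = 1):\\
&z = wx + b = 0.5 \cdot 2 - 1 = 0, \qquad a = \sigma(z) = \frac{1}{1 + e^{-z}} = 0.5,\\
&L = \tfrac{1}{2}(a - y)^2 = \tfrac{1}{2}(0.5 - 1)^2 = 0.125.\\[4pt]
&\text{Backward pass (chain rule):}\\
&\frac{\partial L}{\partial a} = a - y = -0.5, \qquad
 \frac{\partial a}{\partial z} = \sigma(z)\bigl(1 - \sigma(z)\bigr) = 0.25,\\
&\frac{\partial L}{\partial w} = \frac{\partial L}{\partial a}\,\frac{\partial a}{\partial z}\,x = (-0.5)(0.25)(2) = -0.25, \qquad
 \frac{\partial L}{\partial b} = \frac{\partial L}{\partial a}\,\frac{\partial a}{\partial z}\cdot 1 = -0.125.\\[4pt]
&\text{Gradient-descent step } (\eta = 0.1):\\
&w \leftarrow 0.5 - 0.1\,(-0.25) = 0.525, \qquad b \leftarrow -1 - 0.1\,(-0.125) = -0.9875.
\end{aligned}
```

Re-running the forward pass with the updated values gives z = 0.0625, a ≈ 0.516 and L ≈ 0.117, so a single step has already lowered the loss from 0.125.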
